Music video and artificial intelligence

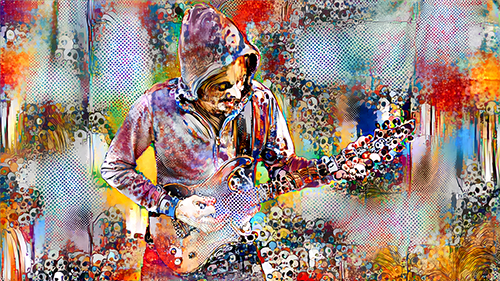

Harccore Anal Hydrogen is a music band that plays metal, electronic music, electro acoustic, trash, pop... Within this project, we always try to experiment new stuffs musically and visually.

February 2017 : We start playing with Google Deep Dream. We've already saw the hallucinating results of the dreams generated by this "artificial intelligence" and that's how we started digging into making a music video with this concept.

First, we've installed all the required libraries on a macbook to create our very firsts images (on a CPU). The first frames we've created took several minutes each, the definition was low, but that was amazing.

Note : These videos are maybe getting old quickly, but they were our inspiration for going deeper.

Deep Dream

How the AI (Artificial Intelligence) works seems complicated but it's not. There are 2 main steps : training, then exploiting. It's the same thing than learning an object to someone, let's say a chair. You show him many pictures of differents chairs. Then after learning it, when he'll see a chair, he'll be able to answer "it's a chair", even if it's a new one he's never saw.

With the "Deep Dream", the AI will dream over a picture you've loaded and draw shapes it has learned. If there are to dark dots on the pictures, it will draw 2 eyes and maybe a face etc...

The awesome part is that there are already trained models downloadable, you don't need to do the painful part of training to get started : https://github.com/BVLC/caffe/tree/master/models/bvlc_googlenet

You can still pimp some parameters :

- Layer : A neural network is made of several named layers. Each one has learned something different during the training. Then we can pass the name of the layer we want and it will produce dreams specific to that layer (see detail below)

- Iterations : the number of times the AI will draw its dream on your original picture.

Neural Style Transfer

The AI lets you apply a style to an image. It uses the data (shapes and colors) of the style-image and try to reproduce your original picture. Here is an example below with the original picture, the style, then the result :

How cool is that ! The possibilities are huge. We took the time and tried a lot of stuffs before producing something really exciting. You may know the successful application "Prisma", it uses the same technology.

Dozens of libraries are available on Github :

- anishathalye/neural-style : https://github.com/anishathalye/neural-style python - awesome lib, but a bit slow.

- jcjohnson/fast-neural-style : https://github.com/jcjohnson/fast-neural-style lua - very fast rendering but you need to train your model with each style. A good training can last several days, so not appropriated with our use case

- manuelruder/artistic-videos https://github.com/manuelruder/artistic-videos lua : excelent rendering and good performance, plus it's made for videos

Each lib has its own parameters but the must usual are :

- Style weight : How much the final image will look lije your style image

- Style size : Size of your style sampling

- Iterations : How many time your original image will be stylized.

- Original colors : Preserve the colors of your original image

OpticalFlow (DeepFlow)

Now we know how to make cool images, how do we do videos ?

Splitting the videos into frames and processing each image independently ?

No. It doesn't work.

The video blinks, each frame is processed independetly, the AI doens't take care of the previous image. Not very sexy

The DeepFlow/Deepmatching solves this problem : the frame is compared to the previous one to generate on optical flow information that is passed to the AI. The animation is then much more fluid, and it's not blinking anymore.

Reminder : we're playing hardcore metal. Probably, we'll move a lot. At 25 fps, the deepflow doens't understand well every quick moving. We had to shoot everything in 50 fps, so we'll be able to give 2x informations to the DeepFlow.

Hardware / GPU

If you deal with AI a lot, forget your CPU, you have to get a good GPU, and probably a Nvidia one.

At the moment, the most performent AI backend is CUDA, created by nvidia.

You'll also need a lot of RAM, a good CPU, a liquid cooling system and a solid power alimentation.

On system76 we've configured the required computer.

- 3.8 GHz i7-6800K

- 32 GB Quad Channel DDR4 at 2400MHz

- 12 GB Titan X with 3584 CUDA Cores

- 500 GB 2.5" SSD

- Liquid cooling

Shooting

On the exemple above, we see that the motions are creating "glitches" on the background, like if the character is glued into a viscous texture.

So we've tried to crop a sylized character

(ex : Jean Claude Van Damme →)

We've shoot the images with a green screen at Hello Production so the keying won't be a pain in the ass.

Montage

Artistic transfer

The video sequence is exported with HD format (1280x720). We choose the style we want to apply.

The lib manuelruder/artistic-videos os doing an excellent job :

- the video is splitted into frames (50 fps)

- the deepflow runs on the CPU, frame by frame

- in the same time, the style transfer is executed on the GPU, waiting for the deepflow frame by frame

CPU + GPU, everybody is working hard in the same time.

X 50 frames per second

X 5m20 (total video time)

= 33 days of rendering

Infinite zoom - DeepDream

Wth the deepdream librairy, we apply dreams on a frame, then we zoom in a bit. With this image we do the same thing. We repeat it as many time as we need to.

This creates an halucinating infinite zoom.

For some sequences (introduction), we've simply reversed the zoom.

It's possible to use different layers during the zoom to generate various hallucinations. Before doing it with random layer names, we have exported 1 frame per layer so we have an idea of how they look like.

The results below.

Image scale to QHD

The sylized images are upscaled with Waifu2x. We get a resolution of 2560x1440 without any ugly jpeg artifact. You can compare Waifu and Photoshop upscaling by clicking on the images below :

The frames are converted to a 50 fps video and re-imported to our video software.

Et voilà !

Improvements

User interface

The main improvement we can work on is the user interface. At the moment, we are using command lines in the terminal to run all these librairies.. not really user-friendly.

It's hard to debug a failure. Many days of rendering just failed with no apparent reason.

With the Studio Phebe's we'll develop an interface so anybody can use these librairies. It will be made with python and django and probably opensourced.

Grey frames on Deepflow

Sometimes, several stylized frames produce grey lines at the border of the picture. This is probably caused by the optical flow. We don't know very well why it occurs sometimes.

Workflow

To stylise a video, we need to process these 3 steps manually :

1. Neural style transfer 2. Upscaling 3. Convert into video

It would be more confortable to execute 1 command that does all the job from the original video to an upscaled stylized video.